Know exactly how much you pay to acquire any user: Python with Google API

And that's only one side of the coin. What about how much the campaigns actually cost you? How much are you paying for an active user for example? How valuable would it be to you to know exactly, for each individual user, how much you've paid to acquire them? And combining with the churn rate of your different campaigns, what is the

Customer Lifetime Value of an active user per different campaign, considering the Customer Acquisition Cost?I've been discussing with digital marketing professionals and it seems this many companies do not get to this granular level. Many companies do not have the means to know exactly how much they've paid to acquire a specific user. Although they could. And imagine the possibilities! In Zervant's case for example:

- We were able to identify people coming through our Google display marketing campaigns. We paid very little for those users (in terms of sign-up), but they never became active users. Thus, the cost per active user was still very high. So we cancelled these campaigns.

- We are able to say if a campaign, where we e.g. advertise our paper-as-a-service delivery results in users who send paper invoices. We can calculate how much we pay for each acquired user, how many of them activate, how much they invoice in paper and at what rate they churn. Overall, we will have a detailed idea of how well the marketing message reaches the users who appreciate the paper invoicing and what the revenue is per user. Even if the cost per active user might be higher, if it attracts the right kind of users who benefit from our premium services, their churn might be lower, resulting in a highly favorable CLV/CAC ratio.

Now, to do it the easy way, I would fully recommend having a look at Supermetrics. With Supermetrics you can pull in your marketing spend data into a Google Sheet very easily. No hassle. They will even let you schedule the refresh daily, if you prefer. If you then need to read this data into your analytics database, it's a matter of exporting the Google Sheet. I'm pretty sure it will work ;)

But that's not the path I took. I wanted to query the data myself, directly from the marketing APIs. Why? I'm not sure. But it was worth it I'm sure.

To accomplish this, you need to do the following things:

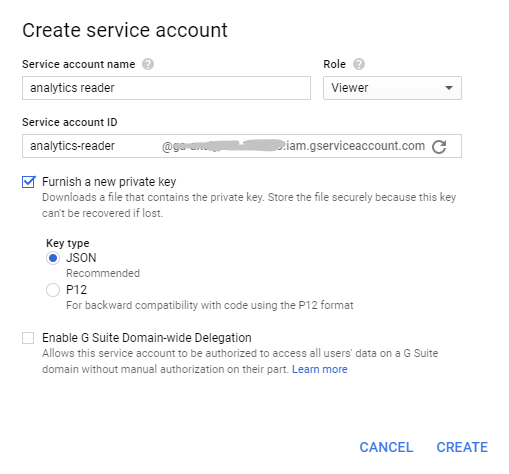

- Create a Google API service account (here).

- Your

VIEW_ID - Some way you will need to grant the service account rights to read Google Analytics data.

The original instruction for this is found here.

Create Service Account

You can create a Service Account on the IAM & Admin portal: https://console.developers.google.com/iam-admin/serviceaccounts

You might need to create a project first. Read more about Google APIs OAuth2 ServiceAccounts here.

VIEW_ID

When on Google Analytics, go to the account selector (top left). When you expand that, you see Analytics Accounts, Properties & Apps and Views. The number below the view you are interested in is your

VIEW_ID.Grant rights

When you try to first time run your script, you will likely get an error such as below:

HttpError 403 when requesting

https://analyticsreporting.googleapis.com/v4/reports:batchGet?alt=json

returned "Google Analytics Reporting API has not been used in project [...] before or it

is disabled. Enable it by visiting

https://console.developers.google.com/apis/api/analyticsreporting.googleapis.com/overview?project=...

then retry. If you enabled this API recently, wait a few minutes for the action to propagate

to our systems and retry."Code

The following sections can be viewed as a full Github Gist here, but is explained in more detail below.Setting up, we import a couple of Google libraries, pandas and datetime.

from apiclient.discovery import build

from oauth2client.service_account import ServiceAccountCredentials

import pandas as pd

import datetime

#SCOPES = ['https://www.googleapis.com/auth/analytics.readonly']

SCOPES = []

KEY_FILE_LOCATION = './path-to-your-private-key-file.json'

VIEW_ID = '123456789'

from_date = datetime.datetime(2017,12,1,0,0,0).strftime("%Y-%m-%d")initialize_analyticsreporting creates the authenticated object that can call the API.get_report calls the API, combines each result page into a list of responsesstrip_ga_prefix just cleans up the column names from the responsesget_dataframe combines the list of responses from get_report into one big pandas dataframedef initialize_analyticsreporting():

"""Initializes an Analytics Reporting API V4 service object.

Returns:

An authorized Analytics Reporting API V4 service object.

"""

credentials = ServiceAccountCredentials.from_json_keyfile_name(

KEY_FILE_LOCATION, SCOPES)

# Build the service object.

analytics = build('analyticsreporting', 'v4', credentials=credentials)

return analytics

def get_report(analytics, query):

"""Queries the Analytics Reporting API V4, combines multiple pageSize

together and returns a pandas dataframe.

Args:

analytics: An authorized Analytics Reporting API V4 service object.

query: the json that describes the query

Returns: a pandas dataframe.

"""

nextPageToken = '0'

responses = []

while (nextPageToken != None):

query['reportRequests'][0]['pageToken'] = nextPageToken

result = analytics.reports().batchGet(

body=query

).execute()

responses.append(result)

if 'nextPageToken' in result['reports'][0]:

nextPageToken = result['reports'][0]['nextPageToken']

else:

return responses

return responses

# The columns from GA come with a ga: -prefix. This simply removes that where it exists.

def strip_ga_prefix(string):

if string[:3] == 'ga:':

return string[3:]

else:

return string

def get_dataframe(responses):

"""Parses the Analytics Reporting API V4 response to a pandas dataframe

Args:

response: An Analytics Reporting API V4 response.

"""

rowlist = []

for response in responses:

for report in response.get('reports', []):

columnHeader = report.get('columnHeader', {})

dimensionHeaders = columnHeader.get('dimensions', [])

metricHeaders = columnHeader.get('metricHeader', {}).get('metricHeaderEntries', [])

for row in report.get('data', {}).get('rows', []):

new_row = {}

dimensions = row.get('dimensions', [])

dateRangeValues = row.get('metrics', [])

for header, dimension in zip(dimensionHeaders, dimensions):

new_row[strip_ga_prefix(header)] = dimension

for i, values in enumerate(dateRangeValues):

for metricHeader, value in zip(metricHeaders, values.get('values')):

new_row[strip_ga_prefix(metricHeader.get('name'))] = value

rowlist.append(new_row)

df = pd.DataFrame(rowlist)

return dfquery = {

'reportRequests': [{

'viewId' : VIEW_ID,

'pageSize' : 10000,

'dateRanges': [{

'startDate' : from_date,

'endDate' : 'today'}],

'dimensions' : [

{'name' : 'ga:date'},

{'name' : 'ga:source'},

{'name' : 'ga:medium'},

{'name' : 'ga:campaign'},

{'name' : 'ga:keyword'},

{'name' : 'ga:adGroup'}

],

"orderBys": [

{'fieldName' : 'ga:adCost', 'sortOrder': 'DESCENDING'},

],

'metrics':[

{'expression' : 'ga:adCost'},],

}]

}analytics = initialize_analyticsreporting()

response = get_report(analytics, query)

df = get_dataframe(response)Google is easy and they have good instructions themselves. I have learned that Facebook and Bing might be trickiers. In the following posts I will give step-by-step instructions for how to get spend data from Bing and Facebook.

Comments

Post a Comment